Simplifying the Thermal Qualification Process with Direct Liquid Cooling for Data Center Servers

Thermal qualification for data centers is the process by which a test team validates a system's thermal performance, confirming that components stay at safe operating temperatures under multiple test conditions. The tests model real-world use cases, such as AI or HPC workloads, in various cooling configurations, so that performance and reliability are verified before the systems go into production. To give the reader a real-world example, we’ll describe how we tested the liquid-cooled Penguin Computing Altus® server using both water and air cooling.

HPC and data center operators should understand that thermal qualification is straightforward and easily manageable. Chilldyne’s engineers have been conducting thermal tests on electronics for decades. It takes one engineer about three weeks to perform this testing. Chilldyne’s negative pressure system makes it easy to test with beta hardware because a leak doesn’t cause any damage.

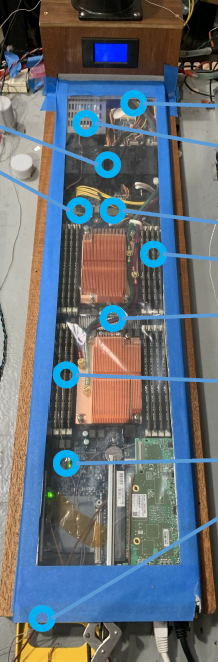

Figure 1. Chilldyne hybrid liquid-air heat sinks (cold plates) and prototype DIMM coolers were installed in Penguin Computing’s Altus XO1232g server.

Real-World Example

We conducted thermal performance testing on a high-density Penguin Computing OCP server (Product Link) equipped with two 64-core AMD EPYC 7742 processors, which we modified to include hybrid cold plates capable of both liquid cooling and air cooling (as a backup). Using air cooling as a backup mitigates the risk of downtime.

This report describes a process to quantify critical metrics like the heat capture ratio and to verify safe limits for all components under load. We monitored variables such as CPU temperatures, frequencies, and power draw. We added temperature sensors to various components to ensure that expensive computing hardware does not overheat. Infrared cameras were used to confirm measurements and identify any unanticipated hot spots. We measured the coolant flow and temperature change (DeltaT) to understand how much heat was being extracted by the water. We measured the air flow rate and DeltaT to determine the amount of heat in the air. We captured the server power input to calculate the heat capture ratio.
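As a rough illustration of the arithmetic behind these measurements, the sketch below converts flow rate and DeltaT into heat for the liquid and air streams, then divides the liquid heat by the server input power to get a heat capture ratio. It assumes plain-water and sea-level air properties (the actual coolant and site conditions may differ). The function names and sample readings are hypothetical and are not values from this test.

```python
# Approximate fluid properties (assumptions; real coolant and air may differ).
WATER_DENSITY_KG_PER_L = 0.997   # water near 25 °C
WATER_CP_J_PER_KG_K = 4180.0     # specific heat of water
AIR_DENSITY_KG_PER_M3 = 1.2      # air near 20 °C at sea level
AIR_CP_J_PER_KG_K = 1005.0       # specific heat of air


def liquid_heat_w(flow_l_per_min: float, delta_t_c: float) -> float:
    """Heat carried away by the coolant: Q = m_dot * cp * DeltaT."""
    mass_flow_kg_per_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return mass_flow_kg_per_s * WATER_CP_J_PER_KG_K * delta_t_c


def air_heat_w(flow_m3_per_s: float, delta_t_c: float) -> float:
    """Heat carried away by the air stream, using the same relation."""
    mass_flow_kg_per_s = flow_m3_per_s * AIR_DENSITY_KG_PER_M3
    return mass_flow_kg_per_s * AIR_CP_J_PER_KG_K * delta_t_c


def heat_capture_ratio(liquid_w: float, server_power_w: float) -> float:
    """Fraction of the server's input power removed by the liquid loop."""
    if server_power_w <= 0:
        raise ValueError("server power must be positive")
    return liquid_w / server_power_w


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    q_liquid = liquid_heat_w(flow_l_per_min=1.0, delta_t_c=8.0)
    q_air = air_heat_w(flow_m3_per_s=0.05, delta_t_c=7.0)
    ratio = heat_capture_ratio(q_liquid, server_power_w=1000.0)
    print(f"liquid: {q_liquid:.0f} W, air: {q_air:.0f} W, capture ratio: {ratio:.0%}")
```

Comparing the sum of the liquid and air heat against the measured server input power is a useful sanity check: a large gap suggests a sensor or flow measurement problem.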

The heat capture ratio is crucial because it enables data center operators to provision the correct amount of air and liquid cooling for the data center. In some cases, the heat capture ratio is lower than expected, resulting in excessive hot aisle temperatures and an overloaded air-cooling system. The heat capture ratio is primarily a function of server design.

For each test variation, the following steps were performed to ensure proper measurements:

- Start the data acquisition system (to capture temperatures and analog readings).

- Start S-Tui or CoreTemp (to capture CPU temperatures and frequencies).

- Start Linpack Xtreme or another CPU burn program (to stress the system).

- Wait until the temperature stabilizes and take an IR photo of the server.

- Save the data acquisition data and the server-reported data. Use the same measurement interval for both to make it easy to plot.
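The "wait until the temperature stabilizes" step can be made repeatable with a simple steady-state check on the logged readings. The sketch below is one possible approach, not the procedure used in this test: it treats the temperature as stable when the spread of the most recent samples falls within a tolerance. The function name, the 30-sample window, and the 0.5 °C tolerance are all hypothetical choices.

```python
def is_stable(temps_c, window=30, tolerance_c=0.5):
    """Return True when the last `window` readings vary by at most `tolerance_c`.

    temps_c: temperature readings in time order, taken at a fixed interval.
    """
    if len(temps_c) < window:
        return False  # not enough data yet to judge
    recent = temps_c[-window:]
    return max(recent) - min(recent) <= tolerance_c


if __name__ == "__main__":
    # Hypothetical log: a warm-up ramp followed by a flat section.
    ramp = [40.0 + 0.5 * i for i in range(20)]   # still rising
    flat = [50.0, 50.1, 49.9] * 10               # settled within 0.2 °C
    print(is_stable(ramp))          # ramp alone is too short and still rising
    print(is_stable(ramp + flat))   # last 30 samples are within tolerance
```

The window should cover enough time for slow components such as DIMMs and voltage regulators to settle, not just the CPUs.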

Figure 2: Light blue circles indicate sensor locations (e.g., thermocouples).

Test Results

1) Reduced power consumption under liquid cooling

The server’s power consumption was reduced by just under 12% using liquid cooling. A major factor in this reduction is the fans, which were observed to run at a much higher speed under air cooling and near idle under liquid cooling. The CPU or GPU will also use 3-10% less power due to lower leakage current.

2) Maintained safe thermal limits across all tested components

Testing showed that other components remained within safe operating thermal limits even with reduced fan speeds under liquid cooling. The BIOS controls fan speed based on CPU and other temperatures. When the server is under load and liquid cooled, the fans will run slowly. If other components exceed temperature specifications, the BIOS may need adjustment. BIOS fan speed adjustment was not necessary for this server.

3) Quantified heat capture ratio and tested with DIMM cooling

Under load, the system captured 56% of the server's total power usage or 95% of the combined power of the CPUs. To further improve this heat capture ratio, additional cooling technologies may be required, such as direct-to-chip RAM cooling. (A supplemental test of prototype DIMM cooling yielded an additional 8 percentage points of heat capture.)

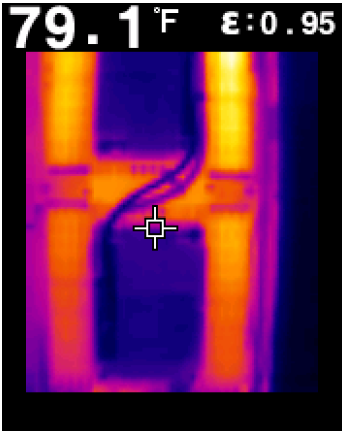

Figure 3. An infrared image of the server's two CPU heat sinks.

Note the contrast between the liquid-cooled heat sinks in blue and the air-cooled DIMMs.

Tips for Thermal Testing

When designing for liquid cooling, it is not enough to say that you have, for example, ten 60 kW racks. You will need to estimate the heat capture ratio in order to provision the air and liquid cooling. In this case, an air-cooled data center would need 600 kW (171 tons) of air cooling. If the heat capture ratio is 80% (as it is in the Sandia Manzano supercomputer with similar Penguin Intel servers), the air-cooling system would need to capture 34 tons and the liquid cooling system would capture 137 tons. On the other hand, an AMD server with a 56% heat capture ratio in the same set of racks would need 75 and 95 tons of air and liquid cooling, respectively. So, you might need twice as much air cooling for the same set of 60 kW racks, depending on the CPU choice.
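The provisioning arithmetic above can be sketched in a few lines. This example assumes the standard conversion of 1 ton of refrigeration to about 3.517 kW; the function name is hypothetical. Its results land within about a ton of the figures quoted above, with the differences coming from rounding.

```python
KW_PER_TON = 3.517  # 1 ton of refrigeration = 12,000 BTU/h, about 3.517 kW


def cooling_split_tons(rack_count, kw_per_rack, heat_capture_ratio):
    """Return (air_tons, liquid_tons) for a set of racks.

    heat_capture_ratio: fraction of total heat removed by the liquid loop (0 to 1).
    """
    if not 0.0 <= heat_capture_ratio <= 1.0:
        raise ValueError("heat_capture_ratio must be between 0 and 1")
    total_kw = rack_count * kw_per_rack
    liquid_kw = total_kw * heat_capture_ratio
    air_kw = total_kw - liquid_kw
    return air_kw / KW_PER_TON, liquid_kw / KW_PER_TON


if __name__ == "__main__":
    # Ten 60 kW racks at the two heat capture ratios discussed in the text.
    for ratio in (0.80, 0.56):
        air, liquid = cooling_split_tons(10, 60, ratio)
        print(f"capture {ratio:.0%}: air {air:.1f} tons, liquid {liquid:.1f} tons")
```

Running the same function with a measured heat capture ratio from thermal qualification, rather than an estimate, shows directly how much the air-cooling requirement shifts.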

Your liquid cooling and server vendors will work together to perform thermal qualification testing, considering practical applications and different use cases. ASHRAE is developing liquid cooling specifications for server vendors so that you can match your servers to your facility water system.

If this seems complex, talk with Chilldyne; we can help you better understand this process. Given some server and local climate information, we can let you know inlet and outlet water and air temperatures, PUE, air and liquid cooling loads, etc. We will let you know if there are any issues with your design beforehand, so you can successfully execute your project.

Planning for Your Next Liquid Cooling Project

As the next generation of more powerful chips is upon us, adopting liquid cooling will become a necessary prerequisite for HPC data centers. Liquid cooling will be required to support the massively increasing compute density driven by the advanced chips supporting AI/ML applications. Further benefits include reduced power consumption, a lower total cost of ownership, and reduced carbon emissions for improved sustainability.

When preparing your current or future data centers for liquid cooling, feel free to reach out and leverage our hands-on experience. At Chilldyne, we have successfully qualified a wide range of servers and configurations for liquid cooling over the past decade and have a fine-tuned process. Chilldyne deployments have never leaked, have worked as predicted, and provide a reliable, resilient, and cost-effective negative-pressure liquid cooling solution. Chilldyne's team of experts is ready to guide you through every step of your liquid cooling journey, including thermal qualification planning and testing, to ensure your data center is prepared to meet the demands of tomorrow's computing needs.